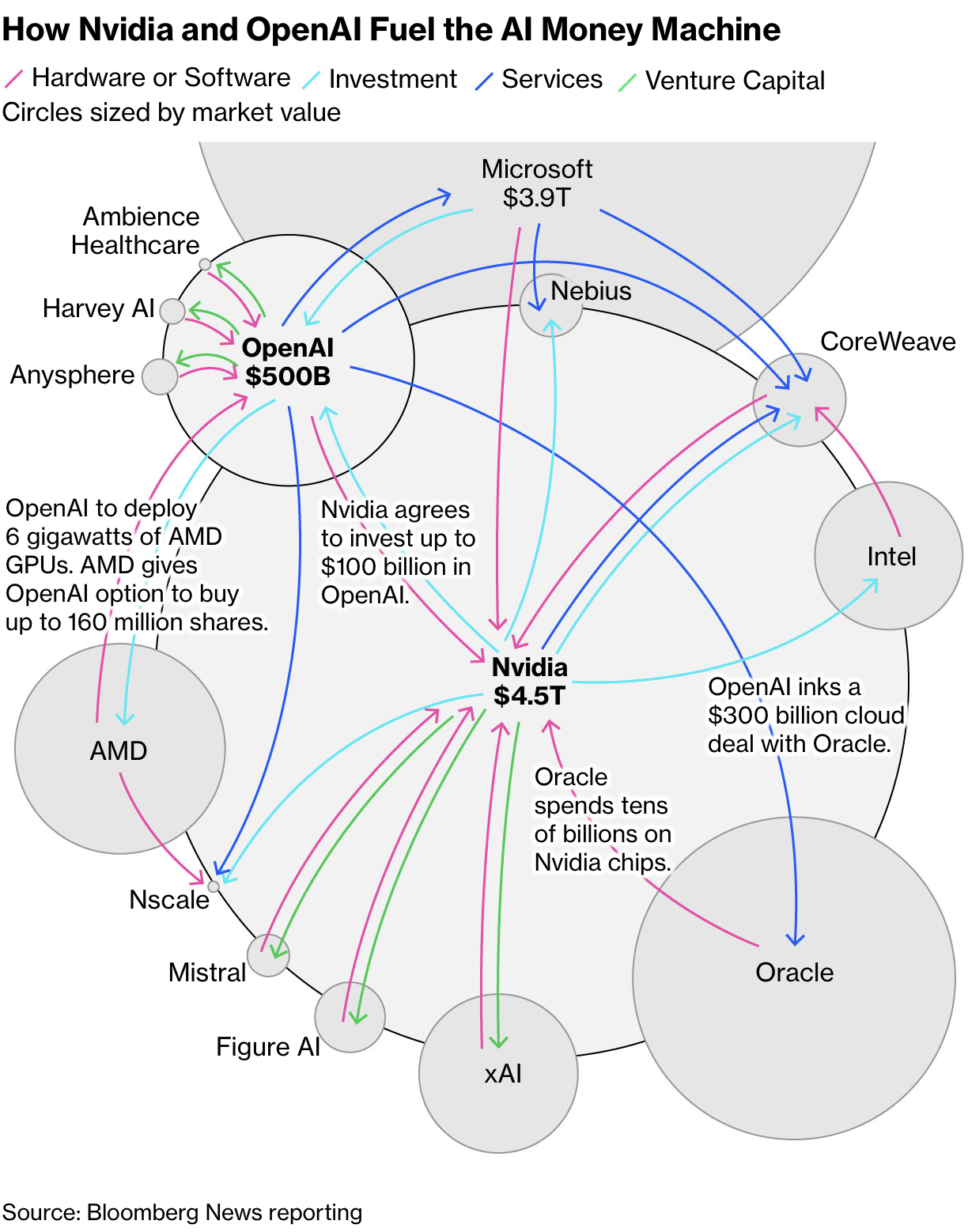

Look at this diagram from Bloomberg and tell me what you see:

At first glance, it's a network of investments between tech companies. OpenAI at the center, connected to Microsoft, Nvidia, Oracle, AMD. Arrows showing money flowing in different directions. Hardware deals, cloud services, equity stakes, and venture capital.

But stare at it longer and something starts to feel wrong. The arrows don't just flow; they loop. Microsoft invests billions in OpenAI, which commits $250 billion to buy Azure cloud services from Microsoft. Nvidia agrees to invest up to $100 billion in OpenAI, which will deploy Nvidia's systems. AMD gives OpenAI warrants for up to 160 million shares in exchange for chip deployment commitments. Oracle's cloud infrastructure hosts Microsoft's AI services while OpenAI has signed a $300 billion cloud deal with Oracle.

The money isn't flowing through the system. It's circulating within it.
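To make the difference between flowing and circulating concrete, here is a minimal Python sketch that treats each deal as a directed payment and compares gross volume with each company's net position. The dollar amounts and the helper names (`FLOWS`, `gross_volume`, `net_positions`) are hypothetical placeholders; only the direction of each flow follows the deals described above, which in reality mix investments, purchase commitments, and warrants that are not directly comparable.

```python
from collections import defaultdict

# Hypothetical deal flows in billions of dollars: (payer, payee, amount).
# The amounts are placeholders, not the actual deal terms; only the
# direction of each flow follows the deals described in the text.
FLOWS = [
    ("Nvidia", "OpenAI", 100),    # Nvidia invests in OpenAI
    ("OpenAI", "Nvidia", 100),    # OpenAI spends on Nvidia systems
    ("Microsoft", "OpenAI", 50),  # Microsoft invests in OpenAI
    ("OpenAI", "Microsoft", 50),  # OpenAI spends on Azure
]


def gross_volume(flows):
    """Total dollars moved, counting every transfer separately."""
    return sum(amount for _, _, amount in flows)


def net_positions(flows):
    """Net inflow per company: money received minus money paid out."""
    net = defaultdict(int)
    for payer, payee, amount in flows:
        net[payer] -= amount
        net[payee] += amount
    return dict(net)


if __name__ == "__main__":
    print("gross volume:", gross_volume(FLOWS))
    print("net positions:", net_positions(FLOWS))
```

With these placeholder amounts, $300 billion of gross volume moves while every company's net position is zero: the same dollars appear as investment on one side and revenue on the other. Real deals are not this symmetric, and circulating money can still buy real equipment; the sketch only shows why headline deal totals can overstate the new money entering the system.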

This is what Bloomberg called "circular deals boosting a $1 trillion AI boom". Seven companies sending trillions back and forth to each other, adding over $1 trillion to their collective market capitalization in the process. The entire US economy right now is essentially seven companies holding each other up at gunpoint, and the question nobody seems comfortable asking is: which meaning of "holding up" applies here?

The Perfect Storm of Rational Decisions

What makes this so fascinating from a systems perspective is that every single decision makes perfect sense.

Satya Nadella, Microsoft's CEO, has over 95% of his compensation tied to performance stock awards. His personal wealth depends directly on Microsoft's stock price, which depends on the market believing Microsoft dominates the AI future. Locking in OpenAI's $250 billion commitment to Azure while holding a 27% equity stake isn't reckless; it's the rational move to secure that narrative. The announcement itself drove billions in market cap gains, immediately justifying the long-term commitment through short-term stock performance.

Larry Ellison, owning 41% of Oracle, saw his net worth surge $100 billion in a single day when Oracle announced a $455 billion AI compute backlog. That backlog is future revenue spread over years, but the stock response was instant. For someone whose wealth is tied to market perception, aggressive infrastructure announcements are individually rational regardless of whether the revenue materializes on schedule.

Nvidia faces the strategic risk that its biggest customers might become its competitors or shift to alternatives, so it chooses to invest up to $100 billion in OpenAI in exchange for OpenAI's commitment to deploy its systems. That turns transactional sales risk into secured future demand. If you're Nvidia, turning capital into guaranteed multi-year commitments is rational competitive positioning.

OpenAI's board, caught between its original nonprofit mission and the brutal capital requirements of AGI development, accepts equity-linked infrastructure deals because it has no choice. Building world-class AI infrastructure requires hundreds of billions of dollars. You can't fund that through mission-driven donations. Accepting Microsoft's 27% stake while committing $250 billion to Azure compute is rational survival.

And fund managers, who identify AI as their number one portfolio risk according to CNBC surveys, continue heavy allocation to these same stocks because the career risk of underperformance is immediate and personal, while the risk of participating in a collective crash is diffused and shared. Missing the rally tanks your performance metrics this quarter; being caught in a bubble that takes everyone down is defensible because everyone else was caught too.

This is rational dysfunction at scale: each actor making the correct decision given their incentive structure, collectively producing a system that looks increasingly fragile the more you examine it.

What Makes This Different From Past Bubbles

The instinct is to compare this to previous infrastructure booms: the railway mania of the 1840s, the dot-com bubble, the fiber optic glut of the late 1990s. Each involved massive overinvestment driven by speculation about transformative technology, each ended in spectacular crashes, and each left behind valuable infrastructure that eventually justified the investment even if individual companies failed.

But there's a critical difference with AI infrastructure: depreciation timelines.

Railway land rights and track beds were durable physical assets. Even after companies went bankrupt, the infrastructure remained and could be repurposed. The fiber optic cables laid during the telecom boom seemed wasteful when only 5% of installed capacity was being used in 2001, but that cable eventually powered the high-speed internet revolution. The physical infrastructure had decades of residual utility.

GPU clusters become obsolete within 2-3 years. AI chips are software-dependent and technology-specific. Once capital spending is adjusted for depreciation over each asset's useful life, academic analysis shows the current AI capital expenditure boom already exceeds both the railway peak and the dot-com bubble relative to GDP. And unlike those previous cycles, if demand expectations aren't met, the stranded assets have minimal salvage value.
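The depreciation argument comes down to simple arithmetic. Under straight-line depreciation, an asset loses its cost divided by its useful life every year, so the same dollar of capex consumes far more value annually when the asset lasts three years than when it lasts decades. The sketch below uses hypothetical round numbers, a 3-year life standing in for a GPU cluster and a 50-year life standing in for a rail bed; neither figure comes from the academic analysis cited above, and the function names are illustrative.

```python
def annual_depreciation(cost: float, useful_life_years: float) -> float:
    """Straight-line depreciation: an equal slice of the cost is written off each year."""
    if useful_life_years <= 0:
        raise ValueError("useful life must be positive")
    return cost / useful_life_years


def book_value(cost: float, useful_life_years: float, years_elapsed: float) -> float:
    """Remaining book value after `years_elapsed`, floored at zero (no salvage value assumed)."""
    written_off = annual_depreciation(cost, useful_life_years) * years_elapsed
    return max(cost - written_off, 0.0)


# Hypothetical: $100 billion of capex on each kind of asset.
CAPEX = 100.0
gpu = annual_depreciation(CAPEX, 3)    # stand-in for a GPU cluster (3-year life, assumed)
rail = annual_depreciation(CAPEX, 50)  # stand-in for a rail bed (50-year life, assumed)

if __name__ == "__main__":
    print(f"GPU cluster: ${gpu:.1f}B written off per year")
    print(f"Rail bed:    ${rail:.1f}B written off per year")
    print(f"After 5 years: GPU ${book_value(CAPEX, 3, 5):.0f}B left, "
          f"rail ${book_value(CAPEX, 50, 5):.0f}B left")
```

Per dollar spent, the 3-year asset consumes about 17 times as much capital each year (50 / 3). After five years, an assumed loan term, the GPU stand-in is fully written off while the rail stand-in still carries 90% of its book value.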

The Magnificent 7 tech companies are planning $5.2 trillion in investments over five years. Annual data center and compute investment hit $600 billion this year alone, nearly doubling in two years. But this isn't building railroads that will carry freight for a century. This is building compute capacity that might be obsolete before the loans are repaid.

The 95% Problem

Here's a number that should worry everyone: 95% of enterprise generative AI initiatives deliver no measurable financial return.

Not "miss ambitious targets." No measurable return at all. Companies are prioritizing AI at the board level, with 75% of C-suite leaders making it a strategic focus and 71% of businesses deploying generative AI in some form. But the implementation success rate is abysmal.

The circular investment structure is building capacity for universal adoption. The bet is that the successful 5% of initiatives will scale fast enough to absorb all the infrastructure built for the failing 95%. That's not a conservative assumption; that's front-running demonstrated demand by years, potentially decades.
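The absorption bet can be stated as arithmetic: if capacity is sized as though every initiative succeeds, but only a fraction does, each surviving initiative must consume the reciprocal of that fraction times its planned share. The sketch assumes, hypothetically, that planned compute is spread evenly across initiatives and that failed initiatives consume nothing; `required_scale_up` and `utilization` are illustrative names, not an established model.

```python
def required_scale_up(success_rate: float) -> float:
    """How many times its planned compute each successful initiative must use
    for the survivors to absorb all capacity built for every initiative.

    Assumes (hypothetically) equal planned compute per initiative and zero
    consumption by failed initiatives.
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return 1 / success_rate


def utilization(success_rate: float, scale_up: float) -> float:
    """Fraction of built capacity in use if survivors grow by `scale_up` times, capped at 1."""
    return min(success_rate * scale_up, 1.0)


if __name__ == "__main__":
    print("scale-up needed at 5% success:", required_scale_up(0.05))
    print("utilization if survivors grow 5x:", utilization(0.05, 5))
```

At a 5% success rate, each survivor must use 20 times its planned compute; if survivors merely quintuple their usage, only a quarter of the built capacity is in use.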

OpenAI's $250 billion Azure commitment to Microsoft assumes sustained exponential growth in AI compute consumption. Nvidia's investment of up to $100 billion assumes OpenAI successfully deploys 10 gigawatts of its systems. Oracle's $455 billion backlog assumes AI demand maintains current growth trajectories indefinitely. Each assumption is individually optimistic; collectively, they require everything to go right simultaneously.
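"Everything must go right simultaneously" has a precise meaning: if the assumptions are independent, the probability that all of them hold is the product of their individual probabilities. The probabilities below are hypothetical placeholders rather than forecasts, and independence is itself an assumption.

```python
from math import prod

# Hypothetical probabilities that each assumption holds (placeholders, not forecasts).
ASSUMPTIONS = {
    "OpenAI's Azure consumption keeps growing exponentially": 0.7,
    "OpenAI deploys 10 GW of Nvidia systems": 0.7,
    "Oracle's $455B backlog converts to revenue on schedule": 0.7,
}


def joint_probability(probabilities) -> float:
    """Probability that every assumption holds, assuming they are independent."""
    ps = list(probabilities)  # materialize so generators can be validated and multiplied
    for p in ps:
        if not 0.0 <= p <= 1.0:
            raise ValueError(f"invalid probability: {p}")
    return prod(ps)


if __name__ == "__main__":
    print(f"all assumptions hold: {joint_probability(ASSUMPTIONS.values()):.3f}")
```

Three assumptions that are each 70% likely hold jointly only about 34% of the time. In practice these bets share a common driver, AI demand, so they are positively correlated: that raises the joint probability above the simple product, but it also means failures tend to arrive together.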

The Market's Shifting Mood

Something changed in late 2024. For two years, investors rewarded nearly any company announcing aggressive AI capital expenditure. The announcement itself was enough to drive stock prices higher, regardless of demonstrated return on investment. This was the "speculation phase" of the bubble cycle, where narrative mattered more than results.

That era appears to be ending. In Q4 2024, Meta's stock plunged 12%, losing $140 billion in market value despite strong quarterly results. The market wasn't reacting to poor performance; it was reacting to increased capital expenditure without sufficient proof of monetization. The same day, Alphabet rallied 5%, gaining $130 billion, specifically because it demonstrated that its aggressive capex was coupled with clear monetization success: 28% year-over-year growth in Google Cloud with signs of adoption by AI companies.

This divergence signals entry into what analysts call the "discrimination phase" of the bubble cycle. Investors are no longer rewarding capacity announcements alone; they're demanding proof of economic return. When the market stops rewarding the behavior that feeds the circular loop, capital flows cease, and the system that depends on continuous reinvestment begins to fracture.

The shift from rewarding announcements to demanding results is exactly when infrastructure bubbles historically begin to deflate. Not with dramatic crashes, but with gradually tightening scrutiny on returns.

Who Benefits From Which Story

This is where understanding the Story/Storyteller/Audience framework becomes essential. The same underlying facts support completely different narratives depending on who's telling the story and what they need you to believe.

The "Virtuous Flywheel" Story: This is the narrative from participants. The circular investments aren't problematic; they're a structurally necessary alignment of long-term interests. Unlike the transactional, debt-based vendor financing of the dot-com era (which was speculative), these equity-linked deals address confirmed, voracious compute demand. The temporary appearance of circularity reflects a supply-demand imbalance being corrected through strategic partnerships. This story serves executives justifying massive capital commitments to their boards and shareholders.

The "Systemic Bubble" Story: This is the narrative from skeptics and financial analysts. Seven companies circulating trillions among themselves, adding over a trillion in paper wealth that dwarfs actual revenue, building capacity for demand that hasn't materialized, exhibiting all the classic patterns of infrastructure manias. This story serves financial advisors warning clients about concentration risk and journalists seeking to explain market fragility.

The "Necessary Infrastructure" Story: This is the narrative from technology optimists. Yes, there's overinvestment and yes, many individual projects will fail, but that's how transformative infrastructure always gets built. The railway and fiber optic comparisons aren't warnings; they're validations. This story serves venture capitalists justifying continued deployment of capital and entrepreneurs seeking funding.

Each story is told by someone with clear incentives. Executives need the virtuous flywheel narrative to justify their compensation-driving decisions. Analysts need the systemic bubble narrative to demonstrate their sophisticated risk assessment. Technology optimists need the necessary infrastructure narrative to maintain momentum for their portfolio companies or funding needs.

Bloomberg, writing for institutional investors making actual capital allocation decisions, presents the circularity concern not as sensationalism but as risk assessment. Their audience needs to understand the mechanical fragility even if they're compelled to participate. The framing isn't "AI is bad" or "bubble will crash"; it's "here's the precise mechanism of systemic concentration risk you're navigating."

Compare that to how the same information appears in tech-focused media (celebratory innovation narrative), mainstream news (job displacement fears), or retail investment content (either hype or panic). Same underlying facts, completely different framing based on what serves each audience's needs.

The Gold Standard Problem

While all this AI investment circulates among seven companies, something else is happening in parallel: gold prices are breaking records, surpassing $2,900 per ounce.

Institutional investors are simultaneously betting on transformative high-growth technology and hedging against severe financial downturn. Gold is viewed by strategic analysts as the "optimal hedge for the unique combination of stagflation, recession, debasement and U.S. policy risks" expected in 2025-2026.

This internal market contradiction tells you everything about how fragile participants know this structure is. They can't afford not to participate in the AI boom because the career risk of missing it is too high. But they're also quietly positioning for the possibility that the circular investment loop collapses under its own weight.

The fund managers who identify AI as their top portfolio risk continue buying the stocks while simultaneously accumulating gold. Both actions are individually rational given their constraints. The contradiction reveals the system-level understanding that something is wrong even as individual incentives compel participation.

The Incentive Quadrant Application

This is a perfect demonstration of what I call the Incentive Quadrant: mapping decisions across Me/Us and Now/Later dimensions. Every actor in this system is pursuing Me/Later dominance (securing long-term market position, proprietary AGI access, competitive advantage) but executing through Me/Now mechanisms (maximizing current performance-based compensation, avoiding career risk from underperformance, driving quarterly stock prices).

The drive for long-term strategic advantage compels executives to secure capacity at any cost, leading to reciprocal equity-for-compute deals that lock in capital flows. But this strategic drive is implemented through personal incentives where massive capital expenditure announcements immediately boost performance-based equity awards, justifying further investment and maintaining the high-growth narrative.

For fund managers, the Me/Later calculation (building career reputation, generating returns) conflicts with Me/Now pressures (avoiding underperformance this quarter). The resolution is to participate in a system they recognize as fragile because the immediate career cost of being wrong alone exceeds the diffused cost of being wrong together.

This creates a structural mandate to overspend across the ecosystem. The financial and career cost of missing the AI wave is perceived as far higher than the eventual collective risk of participating in an industry-wide correction. The mechanism ensures the system continues expanding until the market enters a sustained discrimination phase, at which point capital flows cease and systemic repricing begins.

What Comes Next

The uncomfortable truth is that nobody knows. The bulls might be right: this could be genuine infrastructure buildout for a transformative technology, and the temporary appearance of circularity might resolve as demand catches up to capacity. The bears might be right: this could be a classic bubble where individually rational choices create collectively unsustainable patterns, leading to a correction that destroys capital while leaving behind some residual useful infrastructure.

What we can say with confidence: the current structure is fragile. It depends on continuous reinvestment, sustained demand growth, enterprise AI adoption rates far exceeding current success rates, and market patience with capacity buildout ahead of monetization. If any of these assumptions break, the circular loop that's holding up the US economy might suddenly be holding it up in the other sense of that phrase.

The question isn't whether individuals are making smart choices. They are. The question is whether smart individual choices can produce collective outcomes that undermine the system itself. And if the pattern from railways to fiber optics has taught us anything, it's that infrastructure bubbles aren't prevented by individual rationality. They're resolved through correction, sometimes gradually through a discrimination phase, sometimes catastrophically through sudden repricing.

The diagram from Bloomberg doesn't show a conspiracy or manipulation. It shows a system where everyone's incentives align to produce continued expansion regardless of underlying fundamentals. That's not evil; that's just how markets work when the structure itself rewards momentum over scrutiny.

We're watching it happen in real-time. Seven companies, trillions circulating, $1 trillion in added market value, and the entire US economy depending on whether this is artificial intelligence building the future or artificial money building a bubble. The fact that we can't tell the difference yet is probably the most important thing to understand.

Further Reading:

For more on how individually rational decisions create collective dysfunction, see When Being Rational Makes Everything Worse: Understanding the Incentive Quadrant. To understand how we've been through versions of this panic before, read Worried AI Will Steal Your Job? Lessons from the Luddites.